From sound to insight—visualizing audio data

- Leveraging the untapped potential in live and recorded audio data to uncover new opportunities for improving security surveillance.

Overview

The application captured audio data but offered no meaningful way to use or represent it beyond indicating that a recording existed.

My task was to uncover opportunities to surface and transform audio metadata into useful, user-friendly features. This included exploring ways to visualize, contextualize, and apply the data to enhance usability and deliver actionable insights.

Client

Johnson Controls

(Patented Invention)

Team

4 UX Designers, 2 Developers, and 1 Design Manager

Role

Lead UX Designer

Duration

February to June 2024

Observations

A review of the application and its competitors quickly revealed opportunities to differentiate the product through audio functionality. I identified gaps in the market, including limited user interfaces for browsing, searching, and interpreting audio data. Additionally, most systems lacked robust tools for metadata extraction—such as sound classification—making raw audio difficult to analyze or act on.

No Visual Indication of Audio Presence

- Users cannot tell whether a recording or live feed includes audio until they play the video.

No Representation of Audio Metadata

- Audio data is captured but not visualized or surfaced in a way that’s meaningful or interpretable.

- Audio and video streams are treated separately. There’s no unified timeline showing how audio aligns with visual events.

- Critical audio events that occur outside the camera’s frame are not surfaced or made actionable, despite being captured.

Lack of Audio Classification

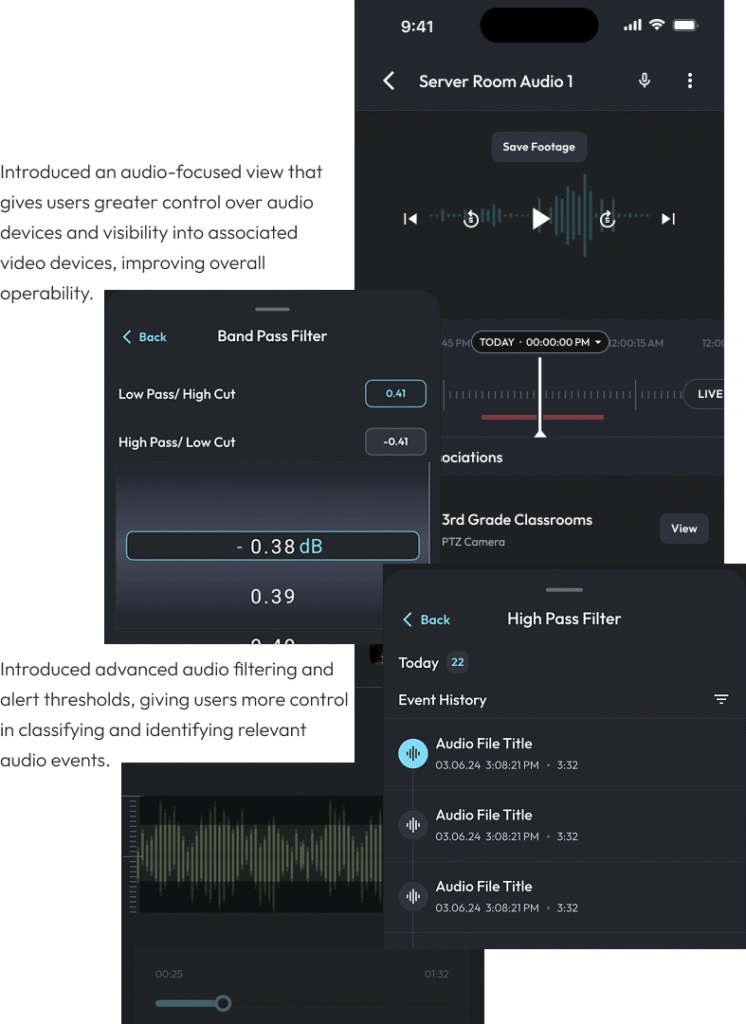

- The system cannot detect or label audio types (e.g., gunshots, screaming, alarms), which limits its usefulness for alerting and post-event analysis.

No Correlation Between Audio Sources and Events

- Audio from different devices can be routed through the network video recorder (NVR), but there's no clear way to correlate specific audio sources with specific events or cameras.

Process

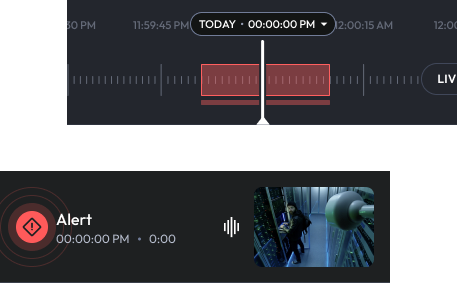

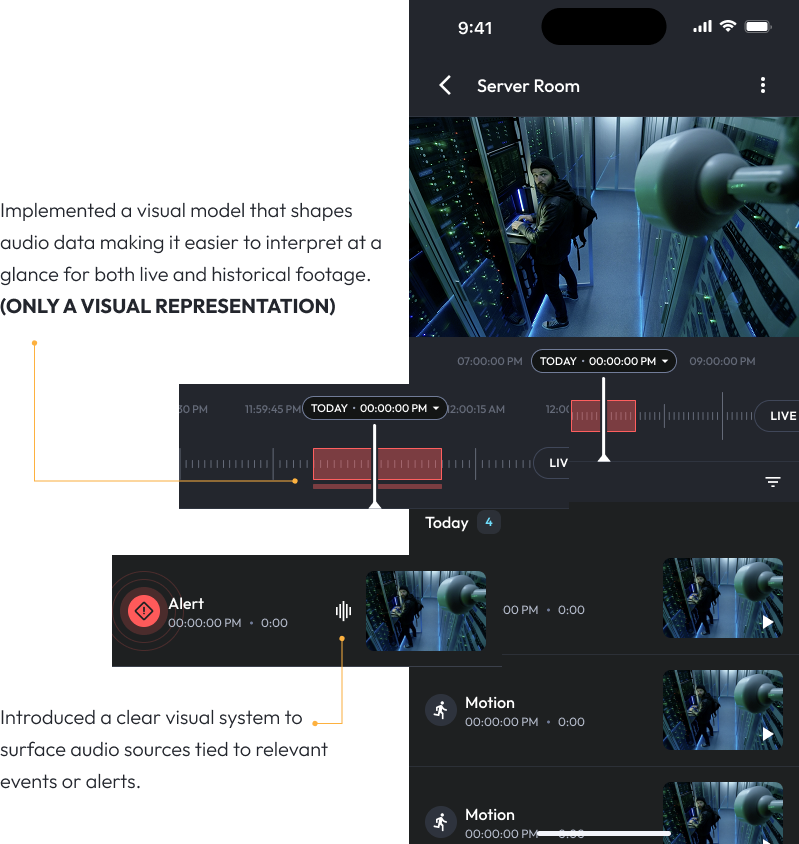

To address the lack of audio awareness in video footage, I conducted user interviews and usability tests that informed three design iterations, delivered in a phased rollout. I introduced a unified audio-visual timeline, audio presence indicators for live and historical events, and smart classification of critical audio cues. This solution significantly improved situational awareness and led to a patent filing supporting future innovation.

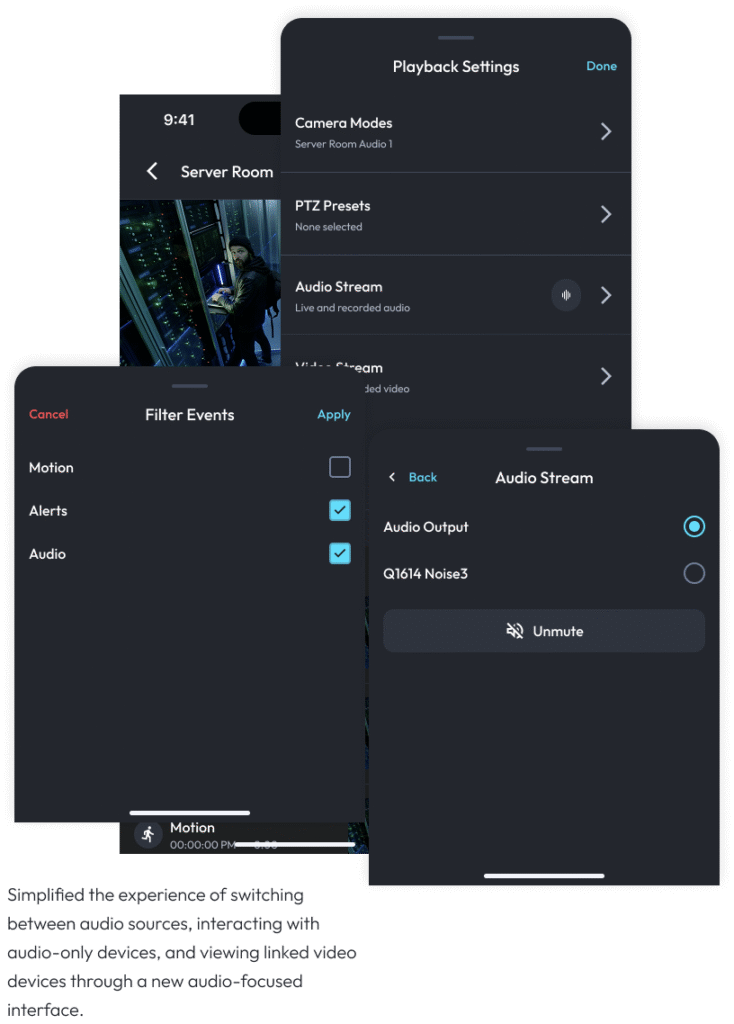

The exploration also uncovered further opportunities to enhance the user experience. As a result, we redesigned the Push-to-Talk (PTT) feature to support more intuitive two-way communication and streamlined the process of associating multiple devices with multiple audio sources, improving overall system usability.

The Solution

The following outlines several solutions I developed to tackle the identified challenges and drive product improvement.

The Result

This project highlighted the critical yet often overlooked role of audio in situational awareness. We learned that users need contextual, interpretable cues, not just raw data, to engage effectively with audio. Unifying audio and video into a single timeline improved users' mental models and their ability to correlate events, while flexible filtering and alert customization empowered users in high-stakes environments.

Small, focused UI changes unlocked complex functionality, and the work demonstrated how UX design can drive innovation and contribute directly to patentable product strategy.

- 42% reduction in time to identify relevant audio events during incident review

- 38% fewer false positives due to customizable alert thresholds and audio classification

- 61% improvement in successful device associations between audio and video sources

- 3x increase in user interaction with audio devices via the new audio-focused view